From Model Training to Real Business Impact

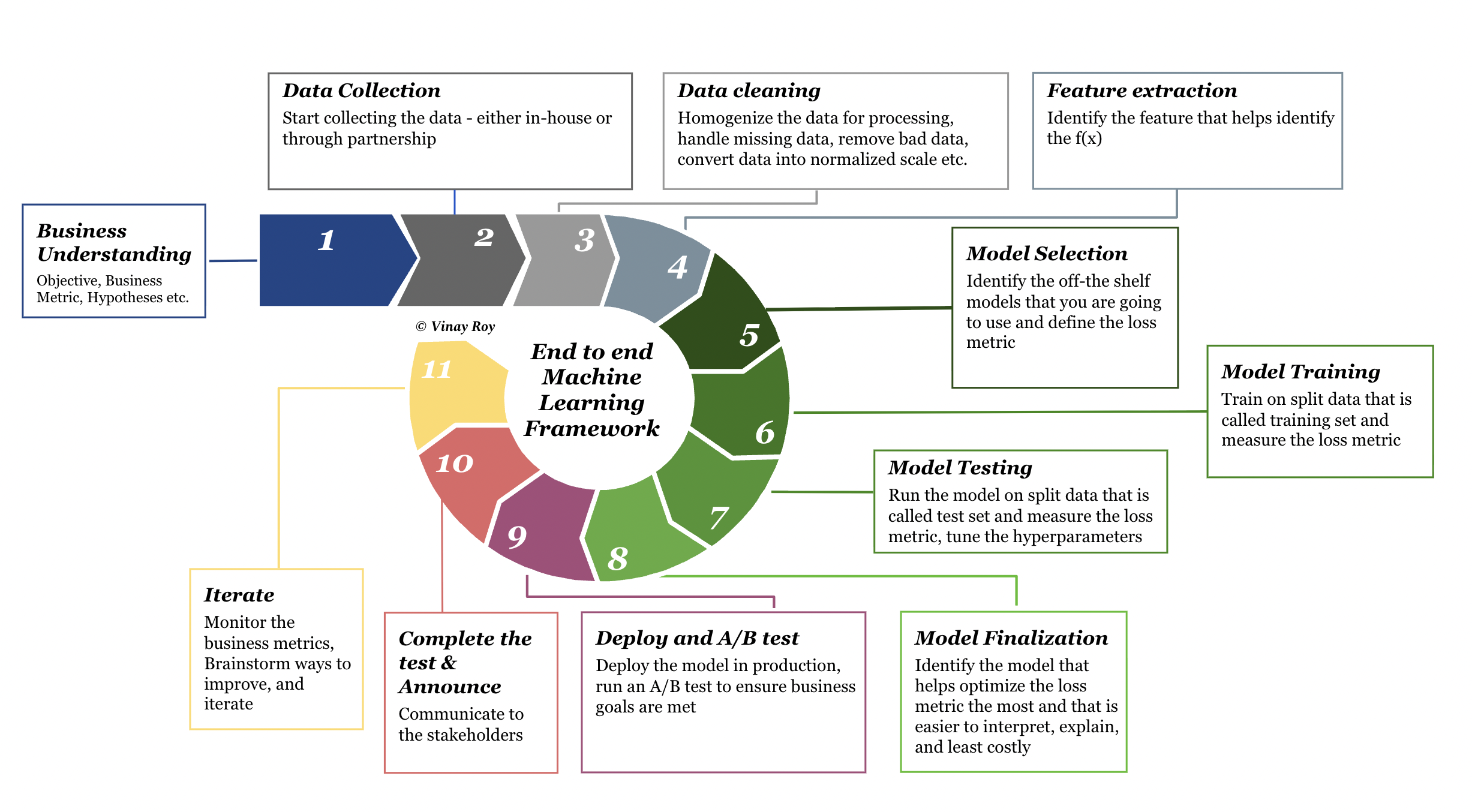

In the previous chapters, we walked through the core stages of building a machine learning model: defining the business objective, collecting data, cleaning it, extracting useful features, selecting candidate algorithms, and training and testing those models.

At that point, many learners assume the work is essentially complete. The model has been trained, the evaluation metrics look strong, and the team has identified a “best-performing” approach.

However, in real organizations, the majority of effort and risk lies beyond model training.

A machine learning model that performs well in a controlled environment does not automatically translate into a successful AI system in production. The difference between a promising experiment and a valuable deployed solution depends on the remaining steps of the machine learning lifecycle: finalizing the model, deploying it safely, validating its business impact, communicating results to stakeholders, and continuously monitoring and improving the system over time.

This chapter focuses on these remaining steps: what happens after training and testing, and what it takes for machine learning to become a durable organizational capability.

After training and evaluating several models, most teams arrive at a shortlist of candidates. It is rarely the case that one model is universally superior across every metric. Instead, different models tend to excel along different dimensions.

For example:

This is why the process of model finalization is not simply a matter of choosing the model with the highest accuracy or lowest loss.

Model finalization is the stage where organizations decide which model is most appropriate for deployment, based on a combination of predictive performance and practical constraints.
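The idea of choosing a model under both performance and practical constraints can be sketched in a few lines. This is a minimal illustration, not a prescribed procedure: the `Candidate` fields, model names, scores, and latency figures are all hypothetical. Hard constraints act as filters first, and only the surviving candidates compete on the predictive metric.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    """Summary of one trained model; every field and value here is illustrative."""
    name: str
    auc: float             # offline predictive quality (higher is better)
    p99_latency_ms: float  # measured serving latency
    interpretable: bool    # can predictions be explained to business users?


def finalize(candidates: List[Candidate], max_latency_ms: float,
             require_interpretable: bool) -> Optional[Candidate]:
    """Return the best-scoring candidate that satisfies every hard constraint."""
    eligible = [
        c for c in candidates
        if c.p99_latency_ms <= max_latency_ms
        and (c.interpretable or not require_interpretable)
    ]
    if not eligible:
        return None  # nothing is deployable under these constraints
    return max(eligible, key=lambda c: c.auc)


# Hypothetical shortlist after training and testing.
candidates = [
    Candidate("gradient_boosting", auc=0.91, p99_latency_ms=12.0, interpretable=False),
    Candidate("deep_net", auc=0.93, p99_latency_ms=85.0, interpretable=False),
    Candidate("logistic_regression", auc=0.88, p99_latency_ms=1.5, interpretable=True),
]

print(finalize(candidates, max_latency_ms=50, require_interpretable=False).name)
print(finalize(candidates, max_latency_ms=50, require_interpretable=True).name)
```

With these made-up numbers, the most accurate model (`deep_net`) is never selected because it misses the latency budget, and adding an interpretability requirement shifts the choice again.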

The first requirement is that the model performs well on evaluation metrics aligned with the business problem.

For regression problems, this may involve metrics such as:

- root mean squared error (RMSE)
- mean absolute error (MAE)
- R² (coefficient of determination)

For classification problems, this may involve:

- precision and recall
- F1 score
- area under the ROC curve (ROC AUC)

But these metrics are only one part of the decision.

A model that improves RMSE by 3% may not be the best choice if it is fragile, expensive, or impossible to explain.
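To make these metrics concrete, here is a minimal pure-Python sketch that computes RMSE and MAE for two hypothetical regression models, the relative RMSE improvement (the kind of figure behind a claim like "3% better"), and precision, recall, and F1 for a small binary classification example. The data is invented for illustration; in practice a library such as scikit-learn provides tested versions of these functions.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: penalizes large errors more heavily."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error: average size of the errors, in the target's units."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true, y_pred):
    """Binary classification metrics, treating 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical regression targets and predictions from two candidate models.
y_true = [10.0, 12.0, 9.0, 15.0]
model_a = [11.0, 12.0, 10.0, 13.0]
model_b = [10.0, 13.0, 9.0, 14.0]

rmse_a, rmse_b = rmse(y_true, model_a), rmse(y_true, model_b)
improvement = (rmse_a - rmse_b) / rmse_a
print(f"RMSE A={rmse_a:.3f}, B={rmse_b:.3f}, relative improvement={improvement:.1%}")

# Hypothetical binary labels and predictions.
print(precision_recall_f1([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
```

A metric improvement computed this way says nothing about robustness, cost, or explainability, which is exactly why it is only one input to the final decision.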

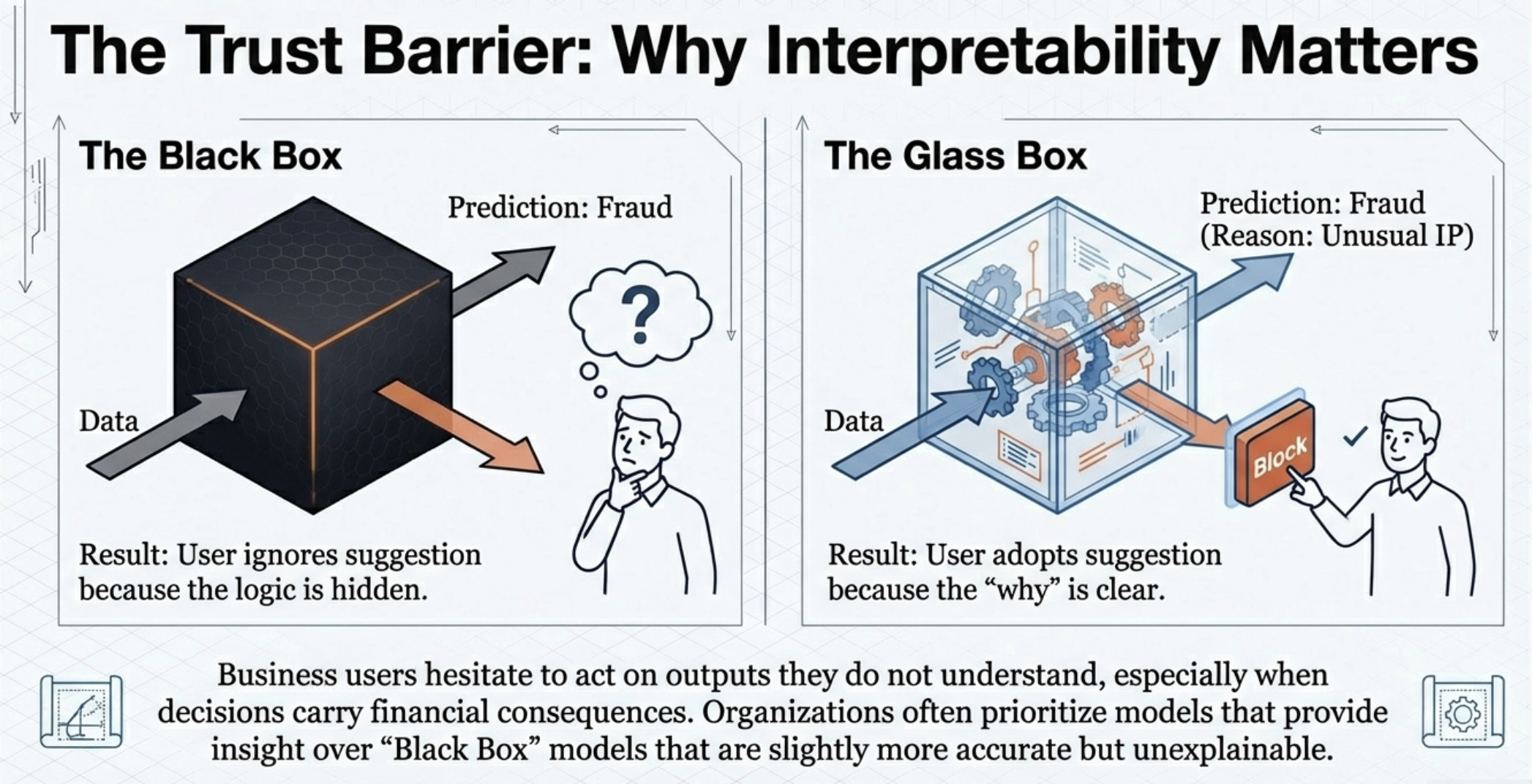

In many business settings, machine learning predictions do not operate in isolation. They influence decisions made by people:

In such environments, interpretability becomes a central requirement.

A highly complex model that produces accurate predictions but cannot be explained often leads to low adoption. Business users may hesitate to act on outputs they do not understand, especially when decisions carry financial or reputational consequences.

As a result, organizations often prefer models that provide insight into why predictions are being made, even if they are slightly less accurate.
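One reason simpler models are easier to explain is that their predictions decompose into per-feature contributions. The sketch below assumes a linear scoring model (for example, the log-odds score of a logistic regression); the churn-style feature names, weights, and bias are hypothetical. Each contribution is simply `weight * value`, so a business user can see which factors pushed a given prediction up or down.

```python
import math


def explain_linear(weights, bias, features):
    """Score one example with a linear model and rank per-feature contributions."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Sort by absolute contribution so the most influential factors come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights for a churn model; positive weights raise churn risk.
weights = {"months_since_last_login": 0.75, "support_tickets": 0.5, "tenure_years": -0.25}
bias = -1.0
customer = {"months_since_last_login": 4, "support_tickets": 2, "tenure_years": 6}

score, ranked = explain_linear(weights, bias, customer)
probability = 1 / (1 + math.exp(-score))  # logistic link turns the score into a probability
print(f"churn probability: {probability:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

For complex models, explanations require separate attribution techniques and are approximations, which is part of the trade-off described above.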

Model finalization also requires thinking about the operational realities of production systems.

Key questions include:

For example, a fraud detection model may need to respond in milliseconds. A large neural network may not meet latency requirements, even if it performs well offline.

Thus, the “winning” model is often the one that balances predictive quality with deployability.
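Latency requirements like the fraud example are usually checked against tail percentiles rather than averages. The following is a rough sketch that times repeated calls to a prediction function and reports p50 and p99 latency against a budget. `fake_model` and the 5 ms budget are hypothetical stand-ins; the timing uses only `time.perf_counter` and `statistics.quantiles` (Python 3.8+).

```python
import statistics
import time


def measure_latency_ms(predict, sample, n_calls=1000):
    """Time repeated single-example predictions and return p50/p99 in milliseconds."""
    timings = []
    for _ in range(n_calls):
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 98 is p99.
    cuts = statistics.quantiles(timings, n=100)
    return {"p50": cuts[49], "p99": cuts[98]}


def fake_model(features):
    """Hypothetical stand-in for a trained model's predict call."""
    return sum(0.1 * v for v in features)


budget_ms = 5.0  # hypothetical latency budget
stats = measure_latency_ms(fake_model, [1.0] * 100)
print(stats, "meets budget:", stats["p99"] <= budget_ms)
```

This only measures in-process compute time. A real serving path adds network hops, serialization, and feature lookups, so production latency should be measured end to end.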

Finally, model finalization requires aligning technical optimization with business cost.

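Consider two errors:

- a false positive, where the model flags a case that did not need action
- a false negative, where the model misses a case that did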

The business impact of these errors is not symmetric. Therefore, the best model is not necessarily the one that maximizes accuracy, but the one that minimizes the total cost of its errors, which usually means avoiding the most costly failures.

This is why organizations often incorporate cost-sensitive evaluation when selecting the final model.
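A small worked comparison shows how cost-sensitive evaluation can reverse an accuracy-based ranking. The confusion counts and per-error costs below are hypothetical, loosely framed as fraud detection on 10,000 transactions: a missed fraud (false negative) is assumed to cost far more than an unnecessary manual review (false positive).

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def total_cost(fp, fn, cost_fp, cost_fn):
    """Business cost of a model's errors under asymmetric per-error costs."""
    return fp * cost_fp + fn * cost_fn


# Hypothetical confusion counts on 10,000 transactions, 100 of which are fraud.
models = {
    "model_a": {"tp": 60, "tn": 9850, "fp": 50, "fn": 40},
    "model_b": {"tp": 90, "tn": 9600, "fp": 300, "fn": 10},
}
COST_FP = 5.0    # hypothetical cost of reviewing a legitimate transaction
COST_FN = 500.0  # hypothetical cost of letting a fraudulent transaction through

for name, c in models.items():
    print(name,
          f"accuracy={accuracy(**c):.3f}",
          f"cost={total_cost(c['fp'], c['fn'], COST_FP, COST_FN):,.0f}")

best_by_accuracy = max(models, key=lambda m: accuracy(**models[m]))
best_by_cost = min(models, key=lambda m: total_cost(models[m]["fp"], models[m]["fn"], COST_FP, COST_FN))
print("best by accuracy:", best_by_accuracy, "| best by cost:", best_by_cost)
```

Here `model_a` has higher accuracy (0.991 vs 0.969), but `model_b` costs 6,500 instead of 20,250 because it misses far fewer fraud cases. The real cost figures have to come from the business, not from the model.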

Once a model is finalized, the next step is deployment.

Deployment is the process of integrating the model into real operational systems so that it can generate predictions in live business workflows.

This is one of the most challenging transitions in machine learning.

A model in a notebook is an analytical artifact. A deployed model is part of a production system.

In practice, deployment involves much more than exporting a trained model file.

A production ML system requires:

For example, deploying a customer churn model requires feeding its predictions into CRM systems so that customer success teams can act on them.

Thus, deployment is as much an engineering and product challenge as it is a modeling task.
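The gap between "a model file" and "a production system" shows up even in a toy serving wrapper. The sketch below is illustrative only: `ToyChurnModel`, `ChurnService`, the feature names, and the version tag are all hypothetical. It loads a serialized artifact, validates the input schema, tags each prediction with a model version and timestamp, and logs it, which are concerns that a trained model alone does not address.

```python
import logging
import pickle
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("churn_service")

MODEL_VERSION = "churn-v1"  # hypothetical version tag
REQUIRED_FEATURES = ("tenure_months", "monthly_spend", "support_tickets")


class ToyChurnModel:
    """Hypothetical stand-in for a trained model; the scoring rule is illustrative."""

    def predict_proba(self, row):
        score = 0.5 + 0.05 * row["support_tickets"] - 0.01 * row["tenure_months"]
        return min(max(score, 0.0), 1.0)  # clamp to a valid probability


class ChurnService:
    """Wraps a model artifact with validation, versioning, and logging."""

    def __init__(self, artifact: bytes, version: str):
        # pickle is unsafe for untrusted data: only load artifacts you produced.
        self.model = pickle.loads(artifact)
        self.version = version

    def predict(self, payload: dict) -> dict:
        missing = [f for f in REQUIRED_FEATURES if f not in payload]
        if missing:
            raise ValueError(f"missing features: {missing}")
        row = {f: float(payload[f]) for f in REQUIRED_FEATURES}
        result = {
            "churn_probability": self.model.predict_proba(row),
            "model_version": self.version,
            "scored_at": datetime.now(timezone.utc).isoformat(),
        }
        logger.info("scored request: %s", result)  # audit trail for monitoring
        return result


artifact = pickle.dumps(ToyChurnModel())  # the "exported model file"
service = ChurnService(artifact, MODEL_VERSION)
result = service.predict({"tenure_months": 24, "monthly_spend": 80, "support_tickets": 3})
print(result)
```

A real deployment would sit behind an API or batch job and push results into downstream tools such as a CRM; those integrations are outside this sketch.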

Even if a model performs well on historical test data, it must still prove its value in the real world.

This is because deployment changes the environment:

Therefore, organizations typically validate deployed models using controlled experiments.

A/B testing is one of the most common approaches for validating real business impact.

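In an A/B test:

- users or cases are randomly assigned to a control group (A) or a treatment group (B)
- the control group continues with the existing process, while the treatment group's decisions are informed by the model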

Teams then measure differences in key outcomes such as:

This helps confirm that the model is not only accurate on historical data, but also economically valuable in practice.
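As a rough illustration of the mechanics, the sketch below assigns users to variants deterministically by hashing their IDs, then compares a binary outcome (such as retention or conversion) between groups with a two-proportion z-test, one common choice for this kind of comparison. The experiment name and the counts are hypothetical, and the test assumes reasonably large samples.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str = "churn_model_test") -> str:
    """Deterministically split users 50/50; the experiment name is hypothetical."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "B" if int(digest, 16) % 2 else "A"


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (lift, z, two-sided p-value) for the difference in success rates."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return p_b - p_a, z, p_value


print(assign_variant("user-42"))

# Hypothetical results: control (A) retained 200 of 5,000, treatment (B) 250 of 5,000.
lift, z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
print(f"lift={lift:.3%}, z={z:.2f}, p={p_value:.4f}")
```

Hashing on a stable ID keeps each user in the same group across sessions. Statistical significance is only part of the decision: the lift still has to be translated into business value and weighed against the cost of running the model.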

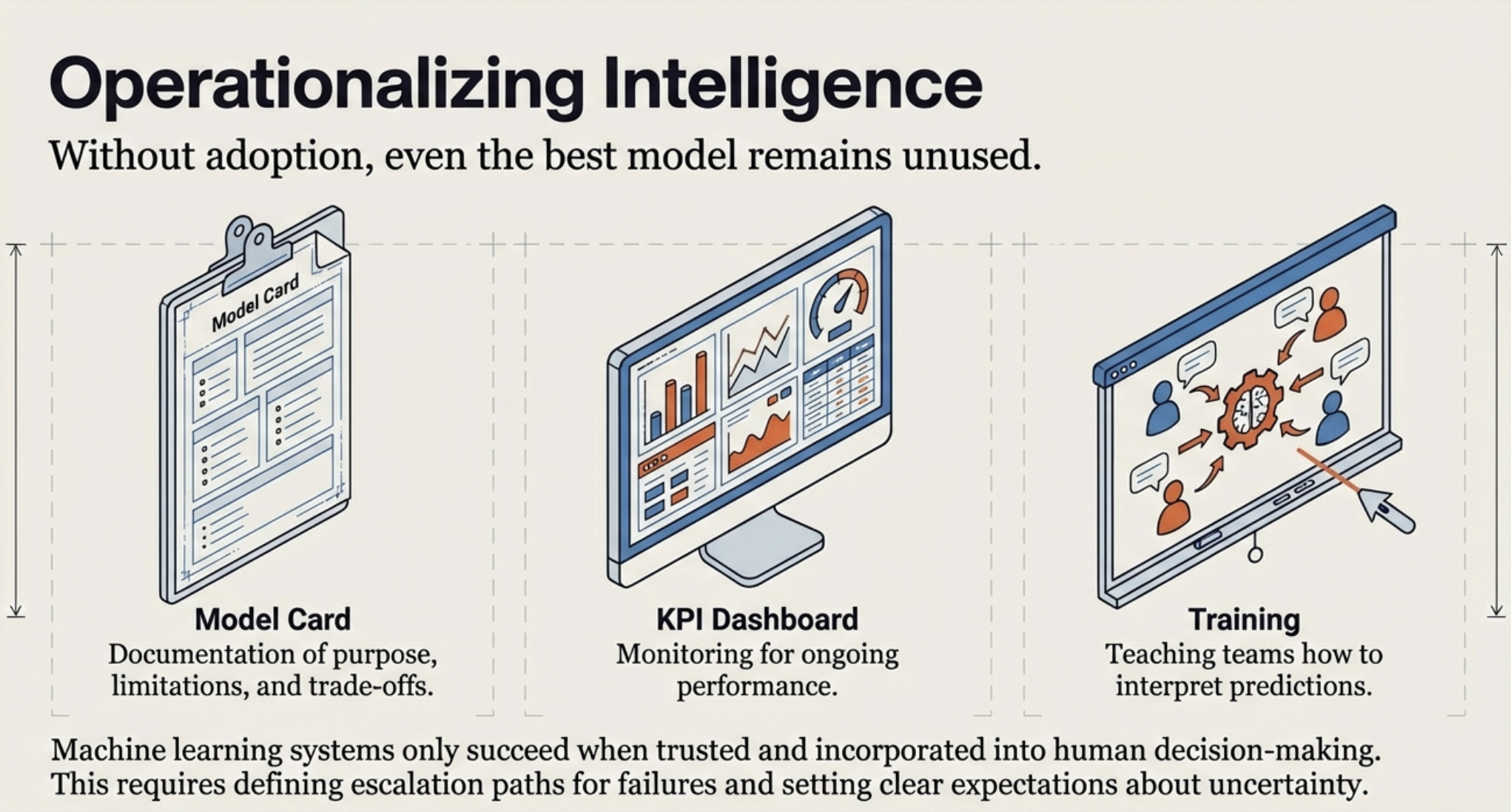

After deployment and testing, the model must be operationalized across the organization.

This step is often underestimated.

Machine learning systems succeed only when they are trusted, understood, and incorporated into decision-making processes.

Organizations therefore invest in communication and stakeholder alignment.

This typically includes:

In mature organizations, this documentation is formalized through artifacts such as model cards, monitoring dashboards, and governance reviews.
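A model card can be kept as structured data alongside the model so it is versioned and reviewed like code. The sketch below is a minimal, hypothetical structure: the field set is illustrative rather than a standard schema, and every value, including the metric numbers, is a placeholder.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    """Minimal model card; the fields are illustrative, not a standard schema."""
    model_name: str
    version: str
    owner: str
    intended_use: str
    out_of_scope_uses: List[str]
    training_data: str
    evaluation_metrics: Dict[str, float]
    known_limitations: List[str] = field(default_factory=list)


card = ModelCard(
    model_name="customer_churn_classifier",
    version="1.2.0",
    owner="customer analytics team",
    intended_use="Rank active customers by churn risk for proactive outreach.",
    out_of_scope_uses=["Pricing or credit decisions"],
    training_data="Hypothetical: 24 months of CRM activity snapshots.",
    evaluation_metrics={"roc_auc": 0.88},  # placeholder value, not a real result
    known_limitations=["Not validated for customers with under 3 months of tenure."],
)

print(json.dumps(asdict(card), indent=2))
```

Keeping the card in a machine-readable form makes it straightforward to surface in dashboards or to require during governance reviews.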

Without adoption, even the best model remains unused.

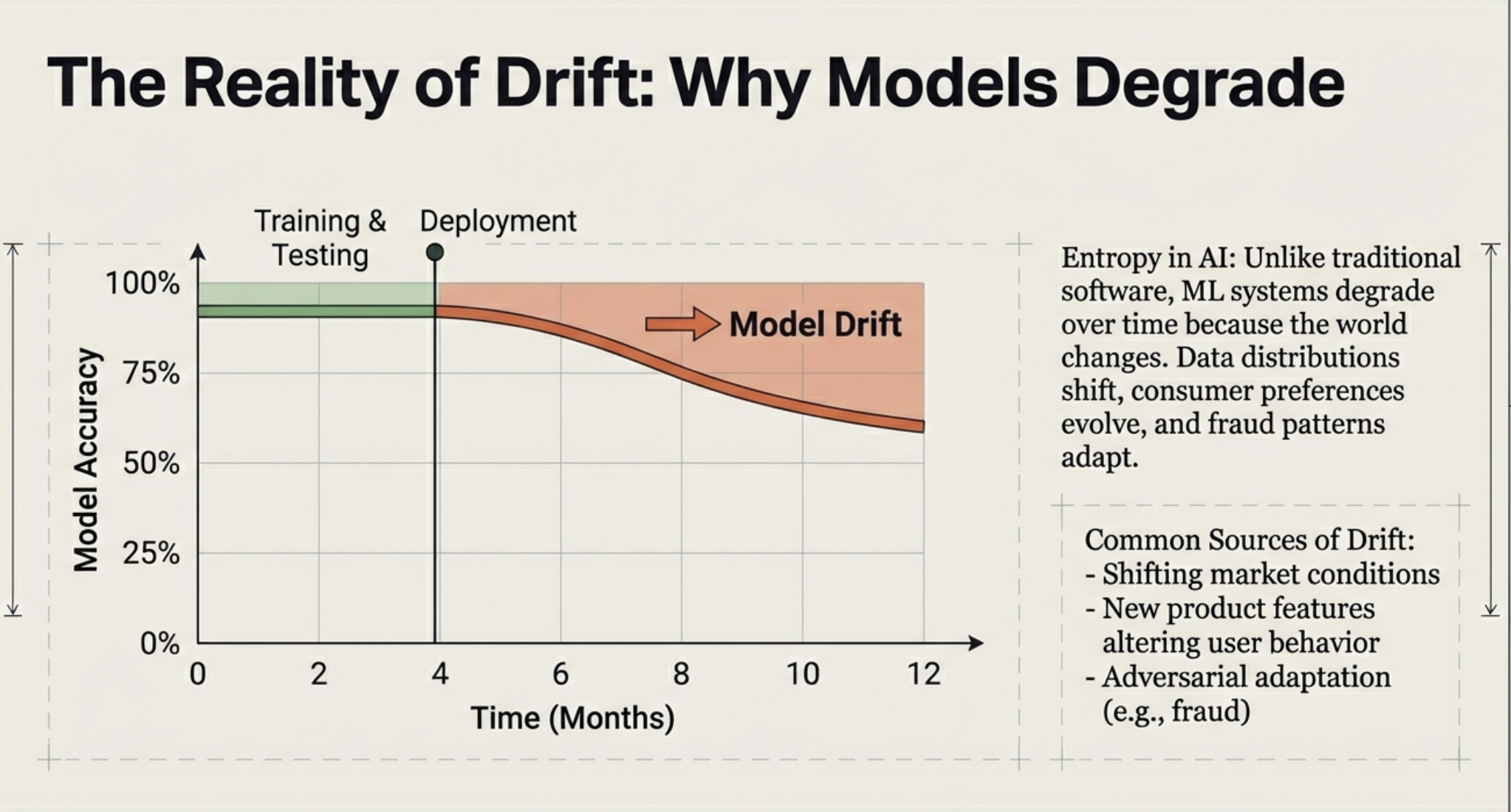

The final step in the framework is iteration.

Unlike traditional software, machine learning systems can degrade over time even when their code is unchanged, because the world they model keeps changing.

This is known as model drift.

Examples include:

As a result, organizations must treat machine learning systems as continuously maintained assets rather than one-time deployments.

Production ML systems require ongoing monitoring of:

Monitoring helps detect drift early, before it causes significant harm.
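One widely used drift signal for a single feature is the Population Stability Index (PSI), which compares the binned distribution of live data against the training data. The sketch below uses equal-width bins over the training range; quantile-based bins are another common choice. The sample data is synthetic, and the thresholds in the comments are informal rules of thumb rather than fixed standards.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of `actual` (live) against `expected` (training).

    Rough rule of thumb: < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 large shift.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic example: live data identical to training, then shifted upward.
training = [i / 100 for i in range(1000)]
live_same = list(training)
live_shifted = [x + 3.0 for x in training]

print(f"PSI (no drift): {psi(training, live_same):.4f}")
print(f"PSI (shifted):  {psi(training, live_shifted):.4f}")
```

In practice a check like this runs on a schedule for each important feature, and its results feed dashboards and alerts alongside prediction and outcome metrics.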

Most organizations implement retraining cycles:

Iteration is not a sign of failure; it is a natural requirement of sustaining AI value over time.
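Retraining cycles are often driven by a mix of a fixed schedule and monitoring triggers. The policy below is one hypothetical way to combine them: retrain when the model is older than a maximum age, when any feature's drift score (such as PSI) exceeds a threshold, or when a live performance metric falls too far below its baseline. All thresholds, dates, and values are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class RetrainPolicy:
    """Hypothetical retraining policy; every threshold here is an assumption."""
    max_age: timedelta = timedelta(days=90)
    drift_threshold: float = 0.25   # e.g. a PSI threshold
    max_metric_drop: float = 0.05   # tolerated drop in, say, ROC AUC


def should_retrain(policy: RetrainPolicy, last_trained: date, today: date,
                   feature_drift: Dict[str, float], baseline_metric: float,
                   live_metric: float) -> List[str]:
    """Return the reasons to retrain; an empty list means no retraining is needed."""
    reasons = []
    if today - last_trained >= policy.max_age:
        reasons.append("scheduled: model age exceeded")
    drifted = [name for name, value in feature_drift.items() if value > policy.drift_threshold]
    if drifted:
        reasons.append(f"drift in features: {drifted}")
    if baseline_metric - live_metric > policy.max_metric_drop:
        reasons.append("performance drop beyond tolerance")
    return reasons


reasons = should_retrain(
    RetrainPolicy(),
    last_trained=date(2024, 1, 1),
    today=date(2024, 2, 15),
    feature_drift={"monthly_spend": 0.31, "tenure_months": 0.04},
    baseline_metric=0.88,
    live_metric=0.86,
)
print(reasons or "no retraining needed")
```

Returning reasons rather than a bare boolean makes each retraining decision auditable, which matters when models are subject to governance review.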

The end-to-end machine learning lifecycle extends far beyond training models.

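The final stages include:

- finalizing the model by balancing predictive performance against interpretability, operational constraints, and business cost
- deploying it into live operational systems
- validating its business impact through controlled experiments such as A/B tests
- communicating results to stakeholders and building trust and adoption
- monitoring for drift and iterating through retraining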

These steps are where machine learning becomes not just a technical achievement, but a lasting business capability.