At Neuto AI, we have had the privilege and the scars of building machine learning systems in very different organizational contexts, from teams of five searching for product–market fit to global enterprises operating at massive scale. Across these environments, the technologies changed, the stakes increased, and the tooling matured. The core lessons, however, stayed remarkably consistent.

This is what we have learned from shipping systems that had to work in the real world.

Machine learning does not fail because of algorithms. It fails because reality is poorly defined.

The hardest work is not training models. It is deciding what the model should be rewarded for, translating messy human behavior into usable data, and identifying levers that actually move business outcomes. Labels are rarely clean. Feedback is delayed, sparse, or indirect. Proxy metrics often look strong on dashboards but quietly diverge from what the business truly cares about.

Teams that treat machine learning as only a modeling exercise underestimate this complexity. The most successful teams invest early and deeply in reward design, instrumentation, and measurement discipline. Getting this wrong creates systems that optimize the wrong thing very efficiently.

In practice, defining the right objective accounts for much of the eventual success.

Short-term rewards are tempting but incomplete. Long-term rewards matter more but are difficult to attribute. Proxy metrics are fragile and frequently break down at scale. In many production systems, feedback arrives weeks or months later, if it arrives at all.

For this reason, reward engineering is not a technical afterthought. It is a strategic decision that forces alignment between product, business, and data teams. Teams that delay this discussion almost always pay for it later.

One of the most counterintuitive lessons we share with clients is to avoid starting with machine learning.

Heuristics, rules, and SQL queries are not primitive solutions. They are essential baselines. They clarify assumptions, surface edge cases, and create reference points that prevent premature optimization. Many problems do not justify the operational and cognitive cost of machine learning.

If a simple approach delivers most of the value, that is not a failure. It is good engineering.
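To make "baseline first" concrete, here is a minimal sketch of a non-ML reference point for a recommendation problem. It assumes interactions arrive as `(user, item)` pairs. The function names `most_popular_baseline` and `hit_rate` are hypothetical, and the tiny dataset is invented purely for illustration. Any learned model would need to beat this number on the same held-out data to justify its cost.

```python
from collections import Counter

def most_popular_baseline(interactions, k=3):
    """Hypothetical baseline: recommend the k most-interacted items to every user."""
    counts = Counter(item for _user, item in interactions)
    # most_common(k) returns (item, count) pairs sorted by count, descending
    return [item for item, _count in counts.most_common(k)]

def hit_rate(recommended, held_out):
    """Fraction of held-out (user, item) pairs whose item was recommended."""
    if not held_out:
        return 0.0
    recommended = set(recommended)
    hits = sum(1 for _user, item in held_out if item in recommended)
    return hits / len(held_out)

# Toy data, invented for illustration only
train = [("u1", "a"), ("u2", "a"), ("u3", "b"), ("u1", "c"), ("u2", "b"), ("u4", "a")]
held_out = [("u5", "a"), ("u6", "d")]

top = most_popular_baseline(train, k=2)
print(top, hit_rate(top, held_out))  # ['a', 'b'] 0.5
```

The baseline is deliberately crude: it ignores the user entirely. That is the point. It fixes a floor, exposes what the evaluation actually measures, and costs almost nothing to operate.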

There are unavoidable trade-offs in machine learning systems.

Precision competes with recall. Exploration competes with exploitation. Accuracy competes with latency and cost. Relevance competes with diversity and serendipity. These are not abstract technical debates. They are product decisions.

High-performing teams are explicit about these trade-offs and align them with the user experience they want to deliver. Poorly performing teams argue about metrics in isolation.
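The precision and recall trade-off is easiest to see as a single decision threshold on a model score. The sketch below assumes binary labels and a scored validation set; `precision_recall_at` and `lowest_threshold_for_precision` are hypothetical helpers, and the scores are made up. Choosing `min_precision` is the product decision: it states how many false alarms the experience can tolerate, and the code then recovers as much recall as that budget allows.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when every score >= threshold is predicted positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    # By convention here, precision is 0.0 when nothing is predicted positive
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def lowest_threshold_for_precision(scores, labels, min_precision):
    """Lowest observed threshold whose precision meets the floor, or None.

    Recall never decreases as the threshold drops, so the lowest qualifying
    threshold yields the highest recall under the precision constraint.
    """
    best = None
    for t in sorted(set(scores), reverse=True):
        precision, _recall = precision_recall_at(scores, labels, t)
        if precision >= min_precision:
            best = t
    return best

# Illustrative validation scores and labels (not real data)
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0]
print(lowest_threshold_for_precision(scores, labels, 0.75))  # 0.4
print(lowest_threshold_for_precision(scores, labels, 0.90))  # 0.8
```

Raising the precision floor from 0.75 to 0.90 moves the threshold from 0.4 to 0.8 and drops recall from 1.0 to roughly 0.67 on this toy set: the same model, two different products.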

Some problems have hard limits, particularly those involving human behavior.

Search, recommendations, fraud detection, and forecasting all contain irreducible uncertainty. Beyond a certain point, additional effort produces diminishing returns. Pushing past that ceiling only makes sense when conducting foundational research rather than building a product.

Realistic expectations protect teams from burnout and organizations from misallocated investment.

Most machine learning systems fail not because they are incorrect, but because they become outdated.

User preferences shift. Supply changes. Context evolves daily, seasonally, and structurally. Systems that ignore time implicitly assume a static world. That assumption is never valid.

Time awareness, whether through retraining strategies, decay functions, or temporal features, is not an optimization. It is a requirement.
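One of the simplest forms of time awareness is an exponential decay function that down-weights old interactions. This sketch assumes events are `(item, timestamp)` pairs and uses a half-life parameterization, where an event `half_life_days` old counts half as much as one happening now. The 14-day default and the function names are hypothetical choices for illustration; a real half-life should be tuned against how quickly behavior actually shifts in the domain.

```python
from datetime import datetime, timedelta

def decay_weight(age_days, half_life_days):
    """Exponential decay: weight halves every half_life_days."""
    return 0.5 ** (age_days / half_life_days)

def decayed_item_scores(events, now, half_life_days=14.0):
    """Sum time-decayed weights per item; the 14-day half-life is an assumption."""
    scores = {}
    for item, ts in events:
        # Clamp future timestamps (e.g. clock skew) to age 0
        age_days = max(0.0, (now - ts).total_seconds() / 86400)
        scores[item] = scores.get(item, 0.0) + decay_weight(age_days, half_life_days)
    return scores

now = datetime(2024, 6, 1)
events = [
    ("a", now),
    ("a", now - timedelta(days=14)),
    ("b", now - timedelta(days=28)),
]
print(decayed_item_scores(events, now))  # {'a': 1.5, 'b': 0.25}
```

Item `b` would tie with a single fresh interaction under raw counts; with decay, its month-old activity contributes only a quarter of a fresh event.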

One pattern has become increasingly clear. Teams with strong evaluation frameworks ship faster and with greater confidence.

Good evaluations create trust. They allow teams to iterate, refactor, and upgrade models without fear. They transform intuition into evidence.

No team regrets investing in robust evaluation.
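A small example of how evaluation turns intuition into evidence is a regression gate: a candidate model is only promoted if it does not score worse than the current one on a fixed evaluation set. The sketch assumes models are plain callables and that exact-match accuracy is an acceptable metric, which is a simplification; `EvalCase`, `accuracy`, `passes_regression_gate`, and `max_drop` are all hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EvalCase:
    text: str
    expected: str

def accuracy(predict, cases):
    """Exact-match accuracy of a callable over a fixed evaluation set."""
    correct = sum(1 for c in cases if predict(c.text) == c.expected)
    return correct / len(cases)

def passes_regression_gate(baseline, candidate, cases, max_drop=0.0):
    """Allow promotion only if the candidate loses at most max_drop accuracy."""
    base = accuracy(baseline, cases)
    cand = accuracy(candidate, cases)
    return cand >= base - max_drop, base, cand

cases = [EvalCase(s, s.upper()) for s in ["a", "b", "c", "d"]]
baseline = str.upper
candidate = lambda s: s.upper() if s != "d" else "?"  # deliberately regresses on "d"

print(passes_regression_gate(baseline, candidate, cases))  # (False, 1.0, 0.75)
```

Because the gate runs the same cases every time, a refactor or model upgrade either clears it or shows exactly how much it regressed, which is what lets teams change things without fear.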

Data by itself has no momentum.

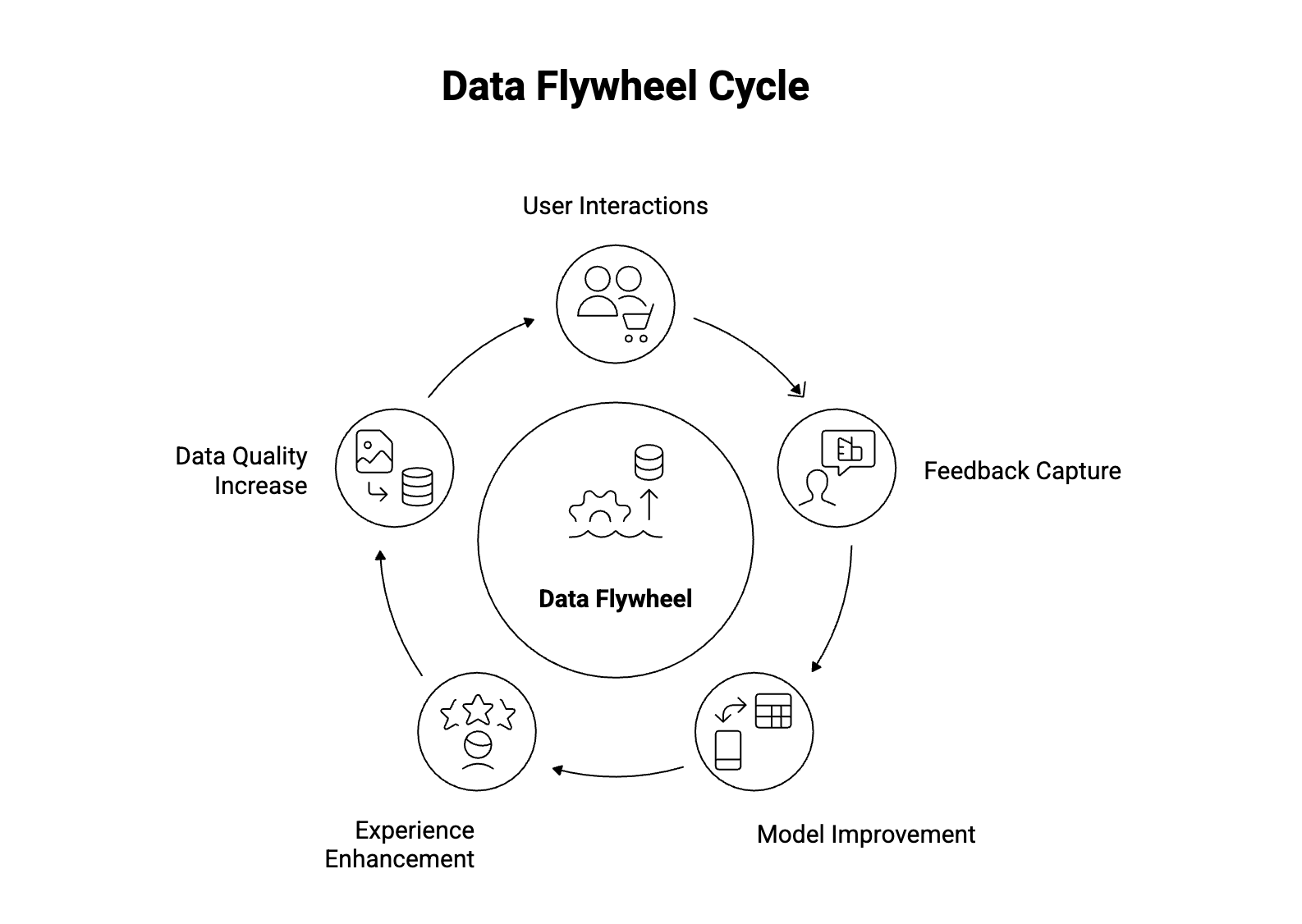

Advantage comes from how quickly feedback is captured, learned from, and reintegrated into the system. The strongest products are designed around a data flywheel where user interactions improve models, models improve experience, and better experience generates higher-quality data.

Speed matters. Feedback loops compound.

With modern large language models, generating content is easy. Evaluating correctness, faithfulness, and safety is significantly harder.

Hallucinations, prompt injection, and silent failures are not edge cases. They are systemic risks. The effort required to detect and mitigate these issues far exceeds the effort required to generate output.

Trust is earned through guardrails, evaluation, and operational discipline, not through demonstrations.

Mature products rarely rely on a single model.

Instead, they use collections of models where smaller, specialized components are orchestrated by larger ones. This modularity improves reliability, interpretability, and adaptability. It also enables independent upgrades.

The model is not the product. The system is.

If a new model release creates anxiety, the system is too tightly coupled.

The pace of model improvement continues to accelerate. Products must be designed to swap models easily and benefit from progress without major rearchitecture. Over time, flexibility consistently outperforms specialization.

General systems outlast clever optimizations.
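Designing products to swap models easily usually means that application code depends on a narrow interface rather than on a specific model. The sketch below assumes a text-in, text-out interface expressed as a `typing.Protocol`; the model classes, registry keys, and `summarize_ticket` function are hypothetical stand-ins for real model clients and product features.

```python
from typing import Protocol

class TextModel(Protocol):
    """The only contract application code relies on."""
    def generate(self, prompt: str) -> str: ...

class EchoModel:
    """Placeholder for an older model client (hypothetical)."""
    def generate(self, prompt: str) -> str:
        return prompt

class UpperModel:
    """Placeholder for a newer model client (hypothetical)."""
    def generate(self, prompt: str) -> str:
        return prompt.upper()

# Swapping models becomes a configuration change, not a rearchitecture
MODEL_REGISTRY = {"echo-v1": EchoModel, "upper-v2": UpperModel}

def build_model(name: str) -> TextModel:
    try:
        return MODEL_REGISTRY[name]()
    except KeyError:
        raise ValueError(f"unknown model: {name!r}") from None

def summarize_ticket(model: TextModel, ticket: str) -> str:
    """Product logic: knows about prompts, not about which model is behind them."""
    return model.generate(f"Summarize: {ticket}")

print(summarize_ticket(build_model("echo-v1"), "login fails"))
print(summarize_ticket(build_model("upper-v2"), "login fails"))
```

With this separation, a new model release means adding a registry entry and running the evaluation suite against it, rather than touching product logic.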

There is a wide gap between something that works once and something that works reliably.

Scale exposes hidden assumptions. Each order of magnitude increase in traffic reveals new operational, data, and organizational failure modes. Observability, monitoring, and recovery matter as much as model quality.

Production is where most machine learning initiatives fail, not because teams lack intelligence, but because the effort required is underestimated.

For most enterprise and business-to-business use cases, model costs are manageable. For consumer-scale applications, they are nontrivial but still not the primary obstacle.

The true constraints are reliability, security, compliance, and trust. Costs will continue to decline. These challenges will not disappear on their own.

Across organizations and products, the same foundational capabilities repeatedly determine success.

Reliable data pipelines, strong instrumentation, robust evaluation, experimentation frameworks, guardrails, and monitoring are reusable investments. Teams that skip these move quickly at first and slowly forever after.

Complexity always emerges over time. It should never be the starting point.

Systems that begin complex collapse under their own weight. Systems that begin simple can evolve. Whenever possible, teams should ask whether workflows can be asynchronous, batched, or deferred.

Operational restraint is a competitive advantage.

Execution is the discipline of moving from present constraints to a future vision.

It requires iteration, sequencing, compromise, and focus. Speed matters, but patience does as well. Some breakthroughs cannot be rushed.

The strongest teams move quickly where they can and accept slowness where they must.

Alignment, incentives, communication, and culture determine outcomes more than architecture diagrams.

Machine learning systems are built by teams, not individuals. Infrastructure, product, design, data, and business functions all contribute. No single role succeeds in isolation.

Technology is often the easier part.

Some of the most valuable insights come from people who understand customers deeply rather than from research papers.

Frontline operators, domain experts, and business users often detect patterns before dashboards do. Strong teams listen broadly and synthesize deliberately.

What feels obvious to one team can be transformative for another.

Expertise is relative. Teaching reinforces understanding. Communities accelerate progress. The field advances fastest when practitioners share what actually works.

Everything should work backward from the customer.

Machine learning is not the objective. Value is. The strongest products combine ambition with attention to detail. AI does not eliminate work. It reshapes it, creating new problems worth solving.

That is the opportunity.